From Hypothetical Risk to Materialized Threat

A finance worker at a multinational firm was invited to a video conference with people he believed were his colleagues. The group, supposedly including his UK-based CFO, wanted to discuss an urgent, secret transaction. Following what appeared to be the CFO’s instructions, the worker initiated a series of transfers totaling $25 million. However, every individual on that call, including the CFO, was an AI-generated deepfake. This incident was highlighted in the last ENISA Threat Landscape report. It is not a fictional scenario but a stark illustration of new cybersecurity challenges. The rise of generative AI has democratized sophisticated attack tools, making AI Deepfake Threats one of the most significant and rapidly evolving challenges.

The threat posed by AI-generated synthetic media is no longer theoretical; it is actively costing companies substantial sums. While technology offers unprecedented opportunities for innovation and efficiency, it also equips adversaries with powerful capabilities for deception and manipulation. This article will explore the latest data on AI Deepfake Threats, break down the most common attack vectors currently being observed, and provide a comprehensive framework for defending your organization. Building a resilient defense requires more than just technology. It also demands robust processes and a well-informed human element.

The Evolving Threat Landscape: What the Reports Reveal

Cybersecurity reports paint a clear and concerning picture of the AI-driven threat landscape, showing a marked escalation in the sophistication and frequency of attacks leveraging artificial intelligence. These threats have moved from the periphery to become mainstream concerns for organizations across all sectors. An alarming 30% of respondents cited this type of attack. This represents a significant increase, indicating that threat actors are not just experimenting with this technology but are deploying it effectively and successfully.

The report underscores a critical trend: AI-powered attacks, like advanced phishing, are exploiting gaps in traditional security. Many organizations are struggling to address these vulnerabilities. Furthermore, the Verizon Data Breach Investigations Report (DBIR) reveals that the use of synthetically generated text in malicious emails has significantly increased. This trend points to a future where distinguishing between a legitimate email and a highly personalized, AI-crafted phishing attempt becomes nearly impossible for the untrained eye. Adversaries use tools like FraudGPT and other large language models (LLMs) to write convincing scam emails and generate malicious code. ENISA notes this allows them to launch campaigns at a previously unimaginable scale and level of personalization.

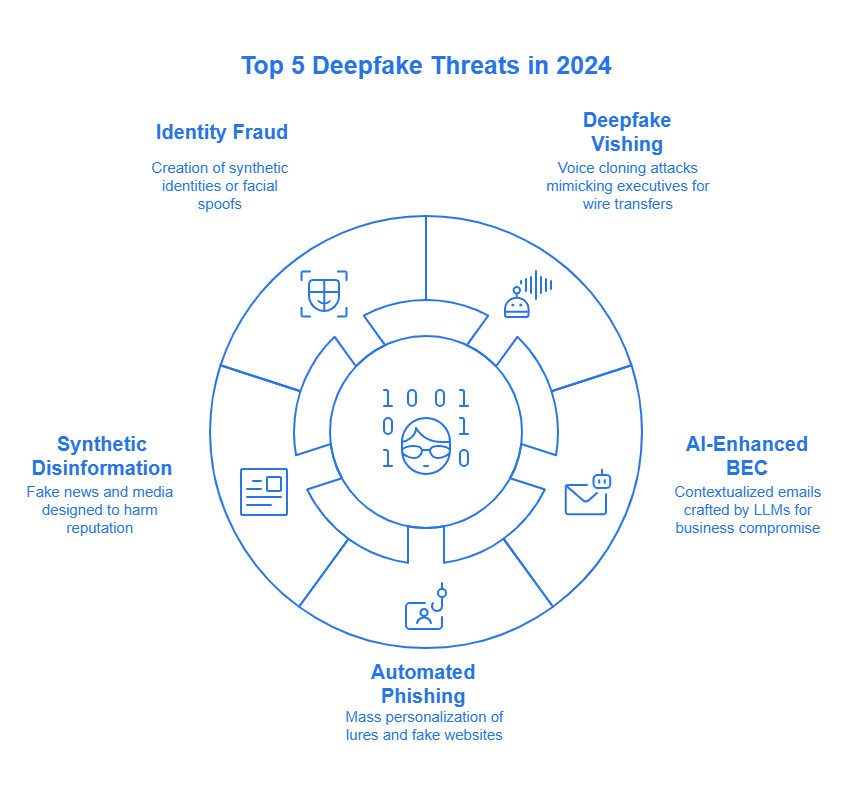

Top 5 AI Deepfake Threats Your Organization Faces Today

While the underlying technology is complex, the ways threat actors utilize AI and deepfakes often represent sophisticated extensions of classic attack methods. Understanding these vectors is the first step toward building an effective defense. Here are some of the most prominent AI Deepfake Threats currently observed:

Deepfake Vishing (Voice Cloning): Attackers use AI to clone the voice of a trusted executive, such as a CEO or CFO. Scammers then use the cloned voice in phone calls or voicemails to authorize fraudulent wire transfers, change payment details, or trick employees into revealing sensitive credentials. Creating a convincing replica may only require a few seconds of a person’s audio, often sourced from public appearances or social media.

Advanced Business Email Compromise (BEC): Generative AI has significantly enhanced BEC attacks. Threat actors now use LLMs to craft flawless, context-aware emails that convincingly mimic an executive’s writing style and reference internal projects or recent events, making them incredibly difficult to detect. The ENISA report highlights a sharp increase in BEC incidents, a trend directly fueled by these new AI capabilities.

Automated Social Engineering & Phishing: AI allows for the creation of social engineering campaigns at an unprecedented scale. This includes generating hyper-realistic phishing websites, creating fake social media profiles to build trust with targets over time, and personalizing phishing lures for thousands of individuals simultaneously.

Synthetic Media for Disinformation and Reputational Damage: Malicious actors, including state-sponsored groups, are leveraging AI to create fake news articles, doctored images, and deepfake videos designed to spread disinformation. This allows criminals to damage a company’s reputation, manipulate stock prices, or influence public opinion. The ENISA report notes that these campaigns increasingly target corporate and critical infrastructure sectors.

Identity Fraud and Impersonation: The ability to create realistic fake identities is a cornerstone of many cybercrimes. AI-driven face-swapping services can be used to attempt to bypass biometric authentication systems. Moreover, attackers can create entirely synthetic employee profiles, complete with convincing online presences, to infiltrate organizations or gain the trust of existing employees for further exploitation. Understanding Identity Management and Access Control is crucial here.

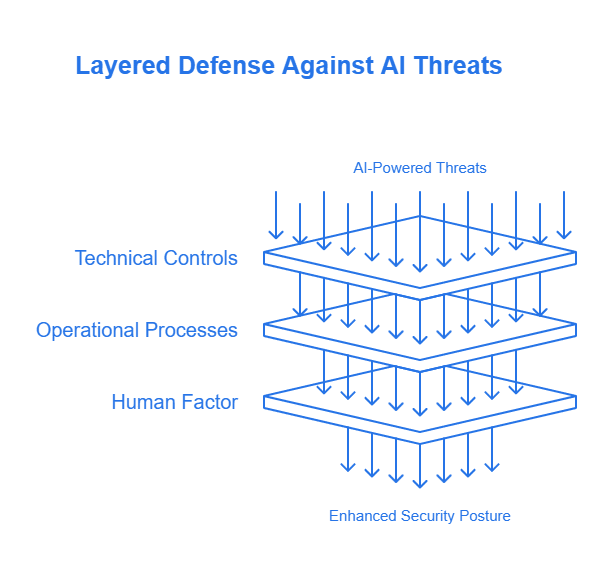

A Multi-Layered Defense: How to Protect Your Organization

Defending against AI Deepfake Threats requires a strategy that acknowledges technology as only one piece of the puzzle. Since many of these attacks exploit human trust and psychological vulnerabilities, the “human firewall”—the collective vigilance of employees—is more critical than ever. Integrating robust technical controls with continuous employee education and well-defined operational processes creates a resilient, multi-layered defense.

One crucial area is bolstering technical defenses. This includes implementing phishing-resistant Multi-Factor Authentication (MFA), as attackers can sometimes bypass traditional methods like SMS codes. Organizations should prioritize stronger forms of MFA, such as FIDO2-compliant hardware keys or robust device-based biometrics. Strengthening email security is also vital: deploy advanced solutions that use AI and machine learning to analyze email content, context, and sender behavior. Adopt Zero Trust principles to limit an attacker’s lateral movement. This ‘never trust, always verify’ approach uses network segmentation and the principle of least privilege. Finally, establish mandatory out-of-band verification for high-stakes transactions, such as large wire transfers or changes to sensitive information, by confirming each request over a secondary communication channel known to be legitimate. This is both a technical and a procedural safeguard.
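
The out-of-band verification rule described above can be expressed as a simple policy check. The following Python sketch is illustrative only: the threshold, the action names, and the `Request` and `Confirmation` types are hypothetical and do not come from any particular product or standard. It shows the core idea that a high-stakes request is approved only when it is confirmed by the same person over a different channel, using contact details taken from a trusted directory rather than from the request itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy values; adjust to your organization's risk appetite.
WIRE_THRESHOLD_USD = 10_000
SENSITIVE_ACTIONS = {"wire_transfer", "change_payment_details", "credential_reset"}


@dataclass(frozen=True)
class Request:
    action: str        # what is being asked for
    amount_usd: float  # 0 for non-monetary actions
    requester: str     # who the request claims to come from
    channel: str       # channel the request arrived on, e.g. "video_call"


@dataclass(frozen=True)
class Confirmation:
    requester: str                # who confirmed the request
    channel: str                  # channel used for the confirmation
    contact_from_directory: bool  # True if the contact came from a trusted directory


def requires_out_of_band(req: Request) -> bool:
    """Return True if the request is high-stakes under the (hypothetical) policy."""
    if req.action not in SENSITIVE_ACTIONS:
        return False
    if req.action == "wire_transfer":
        return req.amount_usd >= WIRE_THRESHOLD_USD
    return True


def is_approved(req: Request, conf: Optional[Confirmation]) -> bool:
    """Approve a high-stakes request only with a valid out-of-band confirmation."""
    if not requires_out_of_band(req):
        return True
    if conf is None:
        return False
    return (
        conf.requester == req.requester
        and conf.channel != req.channel   # must be a second, independent channel
        and conf.contact_from_directory   # never reuse contact details from the request
    )


if __name__ == "__main__":
    transfer = Request("wire_transfer", 25_000_000, "cfo", "video_call")
    callback = Confirmation("cfo", "phone", contact_from_directory=True)
    print(is_approved(transfer, None))      # request alone is not enough
    print(is_approved(transfer, callback))  # confirmed over a known-good channel
```

In a scenario like the video conference described at the start of this article, a rule of this kind would block the transfer until someone reached the real CFO through a known-good phone number or similar channel. A convincing face or voice on the original call does not count as confirmation.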

The Human Firewall: Your First Line of Defense

The human element is often the primary target of AI Deepfake Threats. Continuous security awareness training is essential. Given the rapid evolution of threats, annual training sessions are no longer sufficient. Training programs must teach employees to spot the signs of a deepfake. They should look for unusual emotional cues in vishing calls, strange video artifacts, or urgent, out-of-character emails. Running realistic simulations, such as controlled phishing and vishing campaigns that mimic the latest tactics, provides practical learning experiences and helps identify areas needing further training.

Fostering a culture of healthy skepticism is crucial. Every employee must feel empowered to question unusual or urgent requests, regardless of who they appear to originate from. Leadership must actively promote an environment where it is not only acceptable but encouraged to verify high-stakes requests through a secondary channel without fear of reprisal. This cultural aspect, emphasizing vigilance and verification, is a powerful countermeasure. Effective Awareness and Training programs are key to building this human firewall. Furthermore, having a well-documented and tested Incident Response plan is critical.

Conclusion: Building Resilience in the Age of AI Deepfake Threats

The era of AI-powered cyber attacks has arrived. Deepfakes and generative AI have lowered the barrier to entry for creating highly deceptive and targeted campaigns, from sophisticated BEC emails to voice-cloned vishing calls that can deceive even cautious employees. These are not future-state threats. As reports from ENISA, Verizon, and ISMS.online show, they are active, evolving, and impacting organizations today. The potential for misuse of these technologies requires a proactive and adaptive security posture.

However, the situation is not without solutions. While AI Deepfake Threats are advanced, they are not invincible. They often rely on exploiting the same fundamental elements as traditional attacks: human trust and technical vulnerabilities. Organizations must build a resilient posture. This requires a combination of strong technical controls, continuous training, and clear verification protocols. Empowering every employee as a line of defense is the best countermeasure against today’s evolving threats. The future of cybersecurity will be defined by our ability to adapt, educate, and stay vigilant against these advanced threats.